In recent years, generative artificial intelligence (AI) has become a powerful tool, creating content that used to be made only by humans. As technology advances rapidly, people are excited about every big announcement, curious about who will take the lead.

So what is Google I/O? We’ll let Gemini Advanced answer:

“Google I/O is Google’s annual developer conference where they showcase their latest technologies, products, and updates. It’s a platform for developers to learn, network, and get hands-on experience with Google’s tools and platforms.”

This year’s Google I/O did not disappoint when it came to large language model (LLM) announcements. The tech giant announced updates to its Gemini model family, highlighting advancements in AI performance, accessibility, and cost-effectiveness.

The AI revolution shows no signs of slowing down, and Google clearly intends to play a leading role.

Google Gemini models

“Gemini is our most ambitious model yet, designed to be multimodal and highly efficient.” – Sundar Pichai, Google CEO

Gemini 1.5 Flash

Gemini 1.5 Flash was a key highlight of the event. This model is engineered for speed and efficiency, making it an ideal choice for high-volume, high-frequency tasks. Notably, it is the fastest Gemini model available through the API, providing a cost-effective alternative to the flagship Gemini 1.5 Pro while maintaining strong capabilities.

Developers can now access Gemini 1.5 Flash in public preview in Google AI Studio and Vertex AI, where they can experiment with it and build applications that demand rapid responses and scalability.

Gemini 1.5 Pro

Although it launched only in February, Gemini 1.5 Pro has already received significant upgrades, improving its performance in areas such as coding, translation, reasoning, and image understanding. The gains in visual understanding show up in strong results on multimodal benchmarks such as MMMU, MathVista, ChartQA, DocVQA, and InfographicVQA, which test reasoning over images, charts, documents, and infographics.

To make advanced AI capabilities more accessible, Gemini 1.5 Pro, featuring its one million token context window, will be available to consumers through Gemini Advanced. This will enable users to leverage AI for tasks involving large volumes of information, such as analyzing lengthy PDFs or summarizing extensive research papers.

Additionally, Google is introducing a two-million token context window in both Gemini 1.5 Pro and Gemini 1.5 Flash. Developers can join a waitlist in Google AI Studio to access this feature.

Gemini Nano

Google is also expanding Gemini Nano, its on-device model for smartphones, beyond text to include image understanding. The new version, called Gemini Nano with Multimodality, aims to bring richer AI capabilities directly to mobile devices.

The feature will arrive first on Pixel phones, where applications using the model will be able to process and respond to visual and auditory inputs, creating more intuitive and context-aware mobile experiences.

Gemma and PaliGemma

Alongside Gemini, Google develops Gemma, a family of lightweight open models built from the same research and technology used to create the Gemini models. Gemma 2, the next-generation version set to launch in June, features 27 billion parameters and is optimized for both TPUs (Tensor Processing Units) and GPUs (Graphics Processing Units). The upgraded model aims to deliver better performance and efficiency across a range of AI tasks.

Joining the Gemma lineup is PaliGemma, Google’s first open vision-language model. It combines visual and textual understanding, enabling applications that take both images and text as input, such as image captioning and visual question answering.

Gemini features

Unlike traditional AI models that focus on a single type of data, Gemini is designed to work across several kinds of data, including text, images, audio, video, and code. This versatility opens up applications across many fields and enables more natural and intuitive interactions between humans and machines.

For instance, Gemini can analyze an image and generate a detailed text description, or vice versa. This capability has potential applications in fields like medical imaging, where Gemini could help doctors identify and diagnose diseases by analyzing medical scans. In the creative realm, Gemini could empower artists and designers by generating images based on textual descriptions, or transforming images into different artistic styles.

Gemini’s capabilities reach people through several model sizes and products. Two names that are easy to confuse are Gemini Pro and Gemini Advanced:

- Gemini Pro is a model in the Gemini family that balances capability and cost. Developers can use it, currently as Gemini 1.5 Pro, through the Gemini API in Google AI Studio and through Vertex AI, for complex tasks such as analyzing large documents and building sophisticated AI applications.

- Gemini Advanced, on the other hand, is a consumer subscription, offered as part of the Google One AI Premium Plan, that gives users access to Google’s most capable models in the Gemini app, including Gemini 1.5 Pro with its one million token context window.

The power of a 1 million token context window

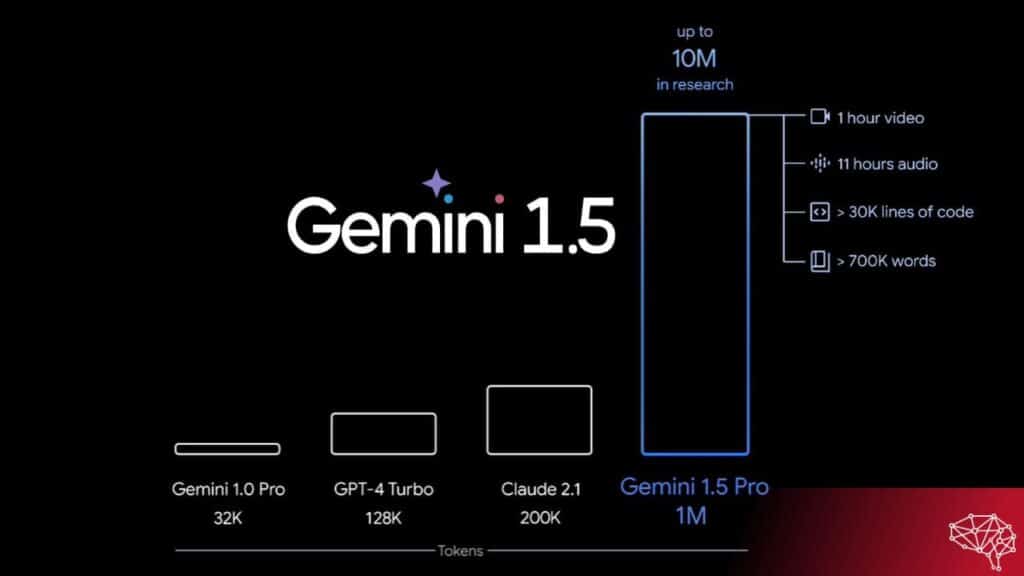

One of Gemini’s most notable features is its one million token context window, which lets it take in very large multimodal inputs at once. A token is roughly equivalent to a word or part of a word, so one million tokens corresponds to roughly 700,000 words of English text. In practice, Gemini can “read” the equivalent of several long novels in a single prompt.

Compared with other widely used models, this is a big jump. OpenAI’s GPT-4 launched with 8,000 and 32,000 token versions, and its newer GPT-4 Turbo and GPT-4o models support 128,000 tokens. Gemini’s one million tokens is nearly eight times larger still, placing it in a different category.
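To put these numbers in perspective, here is a rough back-of-the-envelope sketch. It assumes about 1.33 tokens per English word (roughly 0.75 words per token, a common rule of thumb; Gemini’s actual tokenizer will produce different counts) and a hypothetical 90,000-word novel. The window sizes are the published limits for each model; the script only estimates how many such novels fit in each.

```python
# Back-of-the-envelope context window arithmetic.
# Assumptions: ~1.33 tokens per English word and a hypothetical 90,000-word novel.
# Real tokenizers (including Gemini's) produce different counts.

TOKENS_PER_WORD = 1.33
NOVEL_WORDS = 90_000

CONTEXT_WINDOWS = {
    "GPT-4 (32K version)": 32_000,
    "GPT-4 Turbo / GPT-4o": 128_000,
    "Gemini 1.5 Pro": 1_000_000,
}


def estimate_tokens(word_count: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Estimate how many tokens a text of `word_count` words would use."""
    return round(word_count * tokens_per_word)


def novels_per_window(window_tokens: int, novel_words: int = NOVEL_WORDS) -> float:
    """Estimate how many novels of `novel_words` words fit in a context window."""
    return window_tokens / estimate_tokens(novel_words)


if __name__ == "__main__":
    print(f"Estimated tokens per novel: {estimate_tokens(NOVEL_WORDS):,}")
    for model, window in CONTEXT_WINDOWS.items():
        print(f"{model}: {window:,} tokens, about {novels_per_window(window):.1f} novels")
```

Under these assumptions a single novel (about 119,700 tokens) already overflows a 32,000-token window, while one million tokens holds roughly eight of them.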

The implications of this expanded context window are substantial. It enhances Gemini’s ability to understand the nuances and details of complex queries. When faced with a lengthy prompt or a series of interconnected questions, Gemini can use a vast amount of contextual information to provide more accurate and relevant responses. This is especially useful in situations where understanding the broader context is crucial, such as summarizing lengthy documents, analyzing codebases, or engaging in extended conversations.

Additionally, the 1M token context window creates opportunities for innovative AI applications. For instance, a chatbot could recall entire conversation histories, providing personalized and contextually aware responses. A code assistant could understand the details of a large software project, offering intelligent suggestions and identifying potential errors.
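The sketch below illustrates why a bigger window helps a chatbot “remember.” A chat application has to decide which past messages to send to the model with each turn; one common approach is to keep the most recent messages that fit within a token budget. The `Message` class, the `fit_history` function, and the word-based token estimate are hypothetical simplifications for illustration, not part of any Gemini API.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # for example "user" or "model"
    text: str


def approx_tokens(text: str, tokens_per_word: float = 1.33) -> int:
    """Rough token estimate from word count (an assumption; real tokenizers differ)."""
    return max(1, round(len(text.split()) * tokens_per_word))


def fit_history(history: list[Message], budget: int) -> list[Message]:
    """Keep the most recent messages whose estimated total fits in `budget` tokens."""
    kept: list[Message] = []
    used = 0
    # Walk backwards from the newest message so recent turns are kept first.
    for msg in reversed(history):
        cost = approx_tokens(msg.text)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        Message("user", "hello there"),
        Message("model", "hi how can I help"),
        Message("user", "tell me about Gemini"),
    ]
    print(len(fit_history(history, budget=12)))         # → 2 (oldest turn dropped)
    print(len(fit_history(history, budget=1_000_000)))  # → 3 (everything kept)
```

With a small budget the oldest turns fall out of the model’s view; with a million-token budget, even very long conversations can be sent in full.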

This expanded capacity enables users to engage in more comprehensive interactions with Gemini, allowing it to process and retain information from extensive documents or conversations.

In practice, Gemini Advanced users can now upload a PDF of up to 1,500 pages or have Gemini summarize 100 emails at once. Google says it will soon extend these capabilities to an hour of video and to codebases with more than 30,000 lines.

Gemini Live

Google also introduced Gemini Live, a new mobile experience that lets users hold natural, flowing conversations with Gemini. Users can choose from several realistic-sounding voices and can interrupt or redirect Gemini mid-response, making the interaction feel more like talking to a person.

This technology draws from Project Astra, a Google DeepMind initiative that aims to redefine the future of AI assistants by incorporating real-world context into conversations. For example, a user could point their camera at a building and ask Gemini to identify it, opening up possibilities for location-based information and assistance.

Gems for Gemini

Google also introduced Gems, which let users customize Gemini for specific needs and preferences. Similar in spirit to OpenAI’s custom GPTs, a Gem is created by describing the desired task or behavior in instructions; Gemini then tailors its responses accordingly whenever that Gem is used.
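Google has not published how Gems work internally, so the following is only a conceptual sketch: a Gem can be thought of as a named, saved set of instructions that is combined with each new request. The `Gem` class and its `build_prompt` method are hypothetical names for illustration; a real system would more likely pass the instructions to the model separately from the user’s message rather than simply concatenating text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gem:
    """Hypothetical model of a Gem: a name plus reusable instructions."""
    name: str
    instructions: str

    def build_prompt(self, user_request: str) -> str:
        # Place the saved instructions before every request so the model
        # receives the same guidance each time this Gem is used.
        return f"{self.instructions}\n\nRequest: {user_request}"


if __name__ == "__main__":
    coach = Gem(
        name="Running coach",
        instructions="You are an encouraging running coach. Keep answers under 100 words.",
    )
    print(coach.build_prompt("Plan my training for this week."))
```

The design point is that the user writes the instructions once, and every later request made through that Gem inherits them.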

Transforming Google’s products with Gemini

Gemini’s ability to handle different types of data, combined with its large context window, is set to reshape many Google products, from how we search for information to how we create and collaborate.

Google Search

Search is one of the areas where Gemini’s impact is most anticipated. Traditional search engines have relied heavily on matching the keywords in a query to relevant web pages. With Gemini’s ability to understand the meaning behind complex queries and summarize information from multiple sources, search results could become far more comprehensive and informative.

For example, instead of a list of links, a query about a complex scientific concept could return a concise, well-structured summary drawn from the most relevant sources. Gemini’s multimodal capabilities extend this further, enabling search engines to understand and respond to queries that include not just text, but also images, videos, or even combinations of modalities. This opens up new possibilities for visual search, allowing users to find information based on visual cues or by combining different types of input.
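To make the contrast with keyword matching concrete, here is a deliberately naive keyword ranker that scores pages by how many distinct query words they share. It is a toy illustration under that simplifying assumption, not how Google Search actually ranks pages; real search systems use many additional signals.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_score(query: str, page: str) -> int:
    """Count how many distinct query words also appear on the page."""
    return len(tokenize(query) & tokenize(page))


def rank(query: str, pages: list[str]) -> list[str]:
    """Order pages from most to fewest shared keywords."""
    return sorted(pages, key=lambda page: keyword_score(query, page), reverse=True)


if __name__ == "__main__":
    query = "How do plants make food?"
    pages = [
        "Photosynthesis converts light into chemical energy in plants.",
        "How to make food for your dog at home.",
    ]
    for page in rank(query, pages):
        print(keyword_score(query, page), page)
```

The dog-food page wins (3 shared words against 1) even though the photosynthesis page actually answers the question. Closing that gap between matching words and understanding meaning is what models like Gemini aim to do.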

By understanding both language and context, Gemini could help Google Search deliver more personalized and relevant results. Drawing on search history, preferences, and the current context, it could tailor results to individual needs and interests rather than taking a one-size-fits-all approach.

Google Workspace

In Google Workspace, Gemini could serve as a valuable tool for productivity enhancement. By automating routine tasks, generating creative content, and offering insightful suggestions, Gemini has the potential to streamline workflows and empower users.

Smarter Emails with Gemini

Gmail users will experience an upgrade with the integration of Gemini 1.5 Pro into the side panel. The expanded context window and advanced reasoning capabilities of this model allow for enhanced assistance within Gmail.

This upgrade is currently available to Workspace Labs and Gemini for Workspace Alpha users, with wider availability on desktop for Workspace add-on and Google One AI Premium Plan subscribers expected in June.

In addition, Gmail for mobile will receive three new helpful features:

- Summarize

- Gmail Q&A

- Contextual Smart Reply

The Summarize feature, as the name suggests, utilizes Gemini to provide concise summaries of email threads, saving users time and effort.

The Gmail Q&A feature enables users to chat directly with Gemini within the Gmail mobile app, allowing for contextualized queries about specific emails or conversations.

Finally, Contextual Smart Reply leverages both the email thread and Gemini chat history to generate more accurate and relevant auto-reply suggestions.

Google Docs, Drive, Slides, and Sheets

The integration of Gemini 1.5 Pro into the side panel of Google Docs, Drive, Slides, and Sheets empowers users with advanced AI assistance for various tasks. In Google Docs, Gemini can help refine writing, generate creative prompts, or even complete sentences and paragraphs. In Drive, Gemini can analyze and summarize documents, extracting key insights and facilitating quick information retrieval.

Furthermore, in Slides, Gemini can assist with content generation, slide design, and presentation optimization. In Google Sheets, Gemini can analyze complex data sets, generate insightful reports, and even create charts and graphs to visualize data effectively.

Personalized Planning and Integration

Google also announced features that broaden Gemini’s reach. “Help me write” in Gmail and Docs will soon support Spanish and Portuguese, opening it up to more users. A new planning experience within Gemini Advanced will also let users create detailed plans that take their preferences into account.

Furthermore, Gemini will integrate with other Google applications like Calendar, Tasks, and Keep, allowing it to perform actions within those apps based on user requests or contextual information.

Creativity and collaboration

Google’s Gemini AI models hold immense potential for transforming creativity and collaboration within the company’s product suite.

Google Photos

In Google Photos, Gemini could change how users interact with their visual memories. Imagine effortlessly searching through large photo libraries using natural language queries like “find pictures of my dog at the beach.”

Gemini could also analyze photos to create captions or even tell personalized stories based on the pictures, making remembering more enjoyable and meaningful.

Additionally, Gemini’s ability to understand and create images could help with features like automatic photo editing. This would let users describe changes they want in their photos, and the AI model would make those changes accurately.

Google Arts & Culture

By leveraging its understanding of art, history, and culture, Gemini could offer personalized recommendations and insights to users, tailoring the experience to their unique interests and preferences.

Gemini could also help bridge cultural divides by translating artwork descriptions and historical texts into multiple languages, making cultural heritage more accessible to a global audience.

Google Meet

In the realm of collaboration, Gemini could significantly enhance the Google Meet experience. Its real-time translation capabilities could facilitate communication between participants who speak different languages.

Additionally, Gemini’s transcription services could provide accurate and accessible records of meetings, benefiting participants with hearing impairments or those who prefer to review discussions later. Further, Gemini could analyze meeting content to generate summaries, action items, and even follow-up emails.

Google Cloud

Google Cloud provides a comprehensive ecosystem of developer resources to support the development and deployment of AI applications. It includes tools for data preparation, model training, and model deployment, making it simpler for developers to build and scale AI applications.

Additionally, Google Cloud offers various APIs and pre-trained models that developers can integrate into their applications. For example, the Cloud Natural Language API analyzes text for sentiment, entities, and syntax, while the Cloud Vision API analyzes images for objects, faces, and text. These APIs and models save developers time and effort by removing the need to build such capabilities from scratch.

With Gemini’s launch, Google Cloud expands its AI toolkit even further. Developers can now use Gemini’s multimodal capabilities and vast context window to build applications that understand text, images, audio, and video and generate text and code.

Vertex AI

Vertex AI is Google Cloud’s unified AI platform, designed to streamline machine learning workflows and facilitate the development, deployment, and management of AI models. It offers a suite of tools and services, including data preparation, feature engineering, model training, model evaluation, and model deployment.

Vertex AI automates many of the repetitive and time-consuming tasks associated with machine learning workflows, allowing developers to focus on strategic aspects such as model design and result analysis. It also provides a range of pre-trained models for quick starts and options for custom model training for specialized applications.

At Google I/O 2024, Google’s newest models also came to Vertex AI, including the public preview of Gemini 1.5 Flash, further expanding the platform’s capabilities.

Cloud competition

The AI cloud market is a competitive area where big tech companies like Google, Microsoft, and Amazon are competing for prominence. Each company has its own strengths and faces unique challenges in the AI field.

Microsoft Azure

Microsoft Azure has a long-standing reputation as an enterprise-grade cloud platform, offering a wide range of AI tools and services customized to meet the needs of businesses. Azure’s strengths include its robust infrastructure, enterprise-grade security, and extensive partner ecosystem. It also boasts strong integration with Microsoft’s popular productivity tools like Office 365 and Dynamics 365.

However, Azure’s AI offerings are sometimes perceived as less innovative than Google Cloud’s. While Azure offers a range of pre-trained models and APIs, some see it as less suited to cutting-edge AI research and development.

Amazon Web Services (AWS)

Amazon Web Services (AWS) is a frontrunner in cloud computing, boasting the largest market share and an extensive range of services. AWS offers a comprehensive suite of AI tools and services, including pre-trained models, APIs, and machine learning frameworks. Its strengths lie in its scalability, reliability, and global reach.

Still, AWS’s AI offerings can be complex and may require significant technical expertise to implement. This can be a barrier for smaller businesses or developers with limited resources.

Google’s strengths and areas for improvement

Google Cloud’s strengths lie in its innovative AI models, user-friendly tools, and seamless integration with other Google products and services.

However, despite the immense potential of Gemini models, they have some drawbacks. Like other large language models, Gemini can sometimes struggle with factuality and accuracy, occasionally generating responses that are misleading or incorrect, especially with complex topics. The huge amount of data used to train Gemini can inadvertently introduce biases, potentially leading to discriminatory or unfair outcomes.

Additionally, the complexity of these models makes their inner workings difficult to interpret, raising concerns about transparency and explainability. The significant computational resources required to train and run such large models also raise concerns about their environmental impact. Moreover, the potential for misuse, such as generating harmful content or spreading misinformation, underscores the need for robust safety measures and ethical guidelines in the development and deployment of Gemini models.

To maintain its competitive edge, Google Cloud needs to continue investing in AI research and development, expand its enterprise offerings, and build strategic partnerships with key players in various industries. The company also needs to address concerns about pricing and complexity, making its AI tools and services more accessible to a wider range of users.

Google’s AI vision

Google’s ambitions in the field of AI extend far beyond the development of powerful models like Gemini. The company imagines a future where AI is seamlessly integrated into its products and services, making them better and solving real-world problems in innovative ways. This vision positions AI not as a mere technological novelty, but as a fundamental tool for improving how we interact with the digital world and solve complex challenges.

At the core of this vision is a commitment to “AI for everyone.” Google wants to make AI easy to use and helpful for people, businesses, and communities around the world. That means not only building advanced AI models like Gemini, but also creating tools and platforms that let developers and businesses put AI to work easily.

Gemini, with its multimodal capabilities and vast context window, is a key part of Google’s AI strategy. It serves as a versatile engine, capable of powering a wide array of AI-driven applications. From enhancing search results with more comprehensive and nuanced answers to revolutionizing creative workflows with AI-generated content, Gemini’s potential impact on Google’s product ecosystem is immense.

In the realm of search, Gemini could transform how we find and interact with information. Imagine a search engine that can understand complex queries, summarize information from multiple sources, and even generate creative content in response to your prompts.

Beyond search, Gemini could revolutionize how we work and collaborate. In Google Workspace, it could automate repetitive tasks, generate personalized insights, and facilitate seamless communication across different languages and modalities. It could also power intelligent chatbots and virtual assistants, capable of handling complex customer inquiries and providing tailored support.

Google’s AI vision extends even further, encompassing healthcare, education, and environmental sustainability. In healthcare, AI could be used to analyze medical images, identify patterns in patient data, and accelerate drug discovery. In education, it could personalize learning experiences, provide real-time feedback, and make educational resources more accessible. In environmental sustainability, AI could help optimize energy consumption, monitor deforestation, and predict natural disasters.

Google’s ethical principles

The development of powerful AI models like Gemini raises significant ethical considerations, and Google acknowledges these complexities. The company has consistently emphasized its commitment to responsible AI development, recognizing the potential for both positive and negative impacts on society. Google’s approach is rooted in a set of AI Principles that guide the development and deployment of its AI technologies.

Transparency is a cornerstone of Google’s responsible AI approach. The company aims to be open and communicative about how its AI models are built, trained, and used. This includes publishing research papers, sharing datasets, and engaging in dialogue with the broader AI community.

Fairness is another important part of Google’s AI Principles. The company recognizes that AI models can unintentionally reproduce biases present in the data they learn from. To address this, Google is working on techniques for identifying and mitigating bias in its AI systems. This includes diversifying training data, auditing models for fairness, and engaging with diverse stakeholders to ensure that AI systems are designed and used in an equitable manner.
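As an illustration of what “auditing models for fairness” can involve, the sketch below computes one widely used metric, the demographic parity gap: the difference between the highest and lowest rate at which a model gives a positive outcome to different groups. The function names and toy data are hypothetical, and this is not a description of Google’s internal auditing process; real audits typically combine several metrics with qualitative review.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive (1) predictions for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Toy data: 1 = positive model decision, grouped by a hypothetical attribute.
    preds = [1, 1, 0, 1, 0, 0, 0, 1]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(preds, groups))         # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_gap(preds, groups))  # 0.5
```

A gap of 0.5 would flag that group A receives positive outcomes three times as often as group B, prompting a closer look at the data and model.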

User safety is paramount in Google’s AI development efforts. The company is committed to building AI systems that are safe, reliable, and secure. This includes taking measures to prevent AI from being used for malicious purposes, such as generating harmful content or spreading misinformation. Google also recognizes the importance of giving users control over their AI interactions, giving them tools to understand and manage how their data is used.

To keep its AI efforts aligned with ethical standards, Google reviews projects against its AI Principles, drawing on experts in fields such as ethics, law, social science, and technology. These reviews provide guidance and oversight to Google’s AI teams, helping to ensure that the company’s AI development practices match its stated values and principles.